Fictions and simulations: The case for idealism

Reading | Philosophy | 2022-05-22

This fluid, easy-to-read long-form essay elaborates a new, creative and compelling argument for idealism, indeed a new type of argument.

The matter with matter

I was brought up in a universe that, according to the metaphysical paradigm I was unconsciously spoon-fed, is nothing more than a collection of things: “matter” for short, although it also includes space, time, physical fields, etc.

The assumption is that matter is devoid of qualities such as value, beauty and meaning. The qualitative aspects of reality are dismissed as either an illusion or an emergent placeholder for very complicated physical patterns, just as a planet’s center of gravity stands in for the entire planet when describing its orbit.

The gist of it is, as Steven Weinberg put it perfectly: “The more the universe seems comprehensible, the more it also seems pointless.” [1]

However, careful observation of any human culture that has ever existed reveals that our deepest intuitions and behaviors clash with such a paradigm, even in today’s materialist environment [2]. Indeed, even if materialists preach the gospel of a pointless world, and may even subscribe to that idea intellectually, they still act as if their lives had meaning [3].

Yuval Noah Harari [4] would contend that human culture is just a collection of fictions, many of them extremely ugly, and that we would be better off leaving all such stories behind, especially those that aim to give their believers a sense of meaning. Yet one could argue that portraying fictions as pointless, truthless brain viruses obeying meme mechanics is itself a stupendous piece of fiction. Perhaps the cure for ugly fiction is not no fiction, but better fiction.

This is, I must admit, a hard sell these days, when any attempt to navigate outside the soupy waters of materialism seems either futile or delusional. After all, we have a fridge, therefore materialism is true. Nevertheless, in this brief essay I suggest otherwise, arguing that we have good reasons to think it is not consciousness that comes out of matter, but the other way round. Consciousness comes first, in a paradigm known as idealism [5].

I shall first present the case for materialism as made by some of its more sophisticated defenders, drawing on books such as those by Sean Carroll [6] and Carlo Rovelli [7]. Two arguments for consciousness, one gnoseological and the other ontological, will follow in abbreviated form, together with the consequences that derive from them and a few concluding remarks that have enriched my own experience.

The case for matter

The regularities we perceive around us prod us into formulating explanatory models. The most useful of these imagines a thing called ‘matter’ that obeys certain physical laws. Even if matter cannot explain consciousness (see the ‘hard problem of consciousness’), it can ostensibly explain all physical phenomena, which intuitively leads us to believe that matter really exists outside and independently of consciousness. Perhaps even more importantly, postulating consciousness and subjective experience does not improve the explanatory power of the concept of ‘matter.’ Occam’s razor therefore suggests that matter exists outside and independently of consciousness. In this regard, most opinions fall into two main camps:

- Consciousness does not exist at all: it is an illusion;

- Consciousness exists but is secondary: it emerges from material patterns.

Most materialists adhere to the second option, which has a certain intellectual appeal. The metaphysical paradigm implicit in it is called ‘causal structuralism,’ according to which things exist only insofar as they participate in defining other elements in the great web of things. This web is parsimonious: nodes with no links to other nodes are not admitted, so disconnection is synonymous with non-existence. Things have no substance of their own: each is defined as the set of links it has with other things.

The case for consciousness: gnoseological argument

By ‘consciousness’ here we refer to phenomenal consciousness, the ‘space’ that, in a screen-like fashion, may be filled with many different experiential contents. We intuitively divide these into two main groups:

- Those we identify with: emotions, sensations, feelings, thoughts;

- Those we do not identify with: perceptions.

This criterion is based on whether we feel we can control/create such contents. However, this feeling is fluid. Control often escapes us when it comes to negative emotions, earworm songs or unpredictable thoughts that seem to obey their own independent flow.

At the same time, as mystics of all eras can confirm, the opposite may happen, and we then identify with all the contents of consciousness. In this case, the point is not control (or lack thereof) over those contents, but identification with the ‘screen’ within which they unfold [8].

We can also classify the contents of consciousness in other ways, such as:

- Conceptual knowledge, which is indirect or non-immediate, populated by conceptual entities.

- Non-conceptual knowledge, which is direct or immediate, populated by qualia.

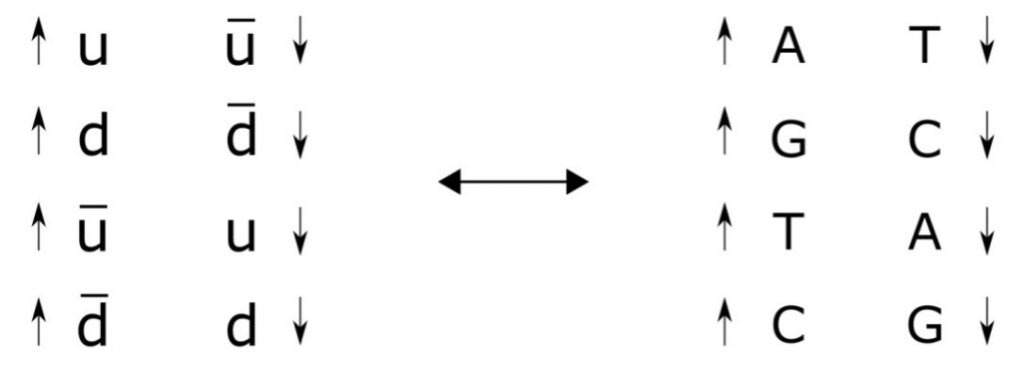

Conceptual entities are always defined in relation to something else: in a dictionary every word/concept is defined in terms of other words/concepts, weaving and knotting a network where each node is connected to a set of other nodes that define it. Each concept is explainable in terms of other concepts (or qualia): thus, the conceptual realm is the realm of reduction, i.e. of causal structuralism. It is also the realm where ‘matter’ lives and, by extension, all physical entities as well.

Let’s take light as an example: whatever physical light is, it is not what we perceive as light. When a stream of photons hits the retina, it does not pierce our skull and illuminate its interior. Instead, it becomes a train of electrical impulses that is correlated with, yet different from, physical light. Photons are concepts we have imagined in order to model certain kinds of perceptual regularities, and they work beautifully, but no one has ever perceived one directly. Strangely enough (from a materialist perspective, that is), we perceive light in our dreams at night, even though our eyes are closed and so no correlation with any physical ‘reality out there’ is possible.

Unfortunately, causal structuralism overlooks the fact that many things exist outside the conceptual realm, such as the qualities of experience, or ‘qualia.’ Qualia are irreducible: they exist by themselves, and thus cannot be reduced to, or defined in relation to, anything else. Someone who has never seen red cannot come to know it by seeing green or blue (i.e. other qualia), or by knowing the frequency of its correlated physical electromagnetic wave (a concept). Whereas concepts may be fully grasped through other concepts, qualia cannot: the only way of truly knowing the taste of a mango is by eating one.

The existence of qualia differs from that of concepts. ‘Existence’ comes from the Latin ex- (out) + sistere (to stand): an entity exists when it stands forth against the background of other entities. Concepts lean on other concepts and/or qualia in order to exist, whereas qualia stand forth (i.e. exist) by themselves, a property that scholastic philosophy called perseitas, or ‘perseity.’

Now a materialist might say: all right, we may conceive of physical light as a concept that does not stand forth by itself, but perhaps this is merely a limitation of our cognition, and in reality physical light is a non-conceptual entity. An idealist can quickly respond: sure, why not; but if physical light is non-conceptual, then it can only belong to the camp of qualia. There is no escaping it: either something stands forth by itself (and we call it a quale) or it stands forth through something other than itself (and we call it a concept). Tertium non datur.

Qualia stand directly on the ground of being, while concepts are built on top of this ground. Yet conceptual networks cannot be entirely suspended in midair: at least some concepts must act as anchors with a one-to-one association to direct, immediate experiences, i.e. qualia. For a language to be intelligible, at least part of its vocabulary must refer to qualia. Many of the logical, ontological and ethical paradoxes we humans have stumbled upon are rooted in our willingness to imagine a groundless language (i.e. conceptual system), afloat and unmoored, disconnected from the ground of qualities.

In this light, the hard problem of consciousness can be seen as the unsolvable consequence of our misguided attempts to reverse the ontological order by grounding qualities in concepts. Needless to say, this does not work. Since qualia are the ontological basis of concepts, consciousness has ontological priority over matter. The world is exactly what it seems to be: a qualitative phenomenon unfolding in consciousness [9].

The case for consciousness: ontological argument

We can formulate a second argument in favor of consciousness that stems from the world of fiction-making, where entire conceptual universes (with their own internal structures, natural laws and inhabitants) may spring into being in the form of novels, video games, films or role-playing games. In some cases we even create nested universes, such as in Michael Ende’s The Neverending Story or in the film The Thirteenth Floor, where virtual realities are simulated within virtual realities and so on and so forth.

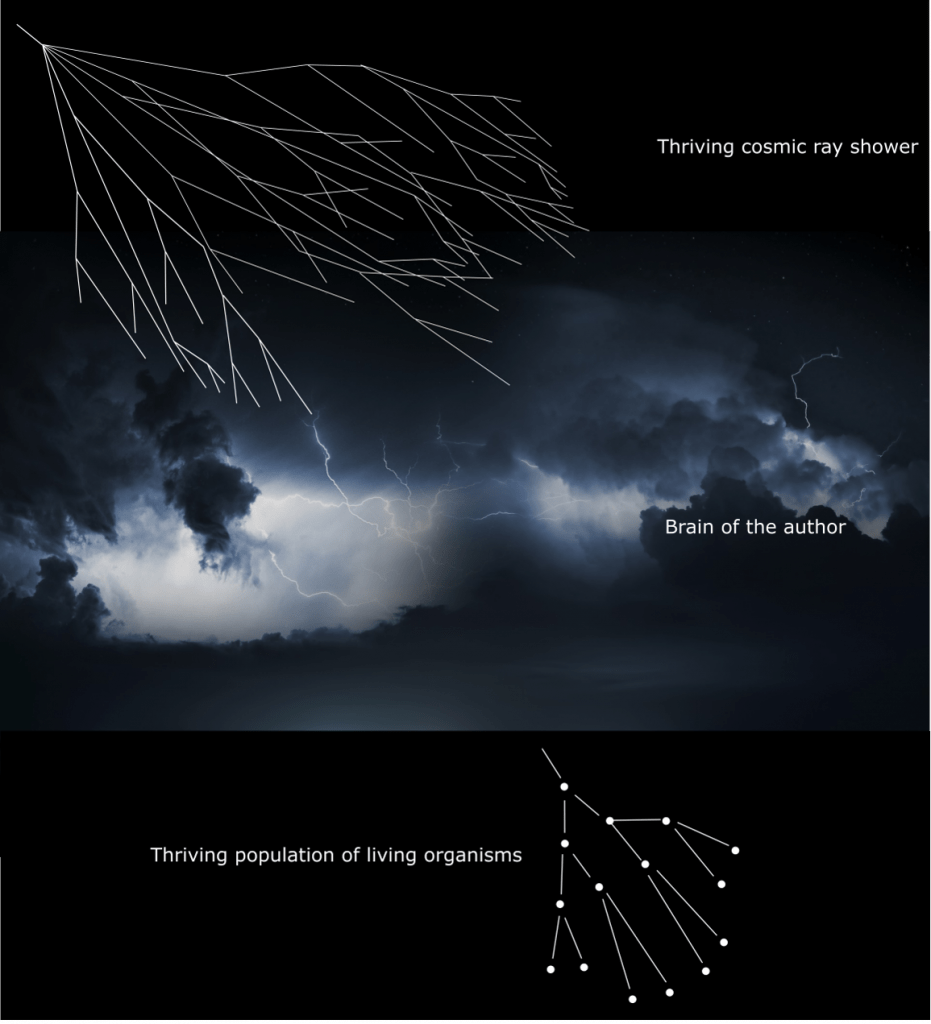

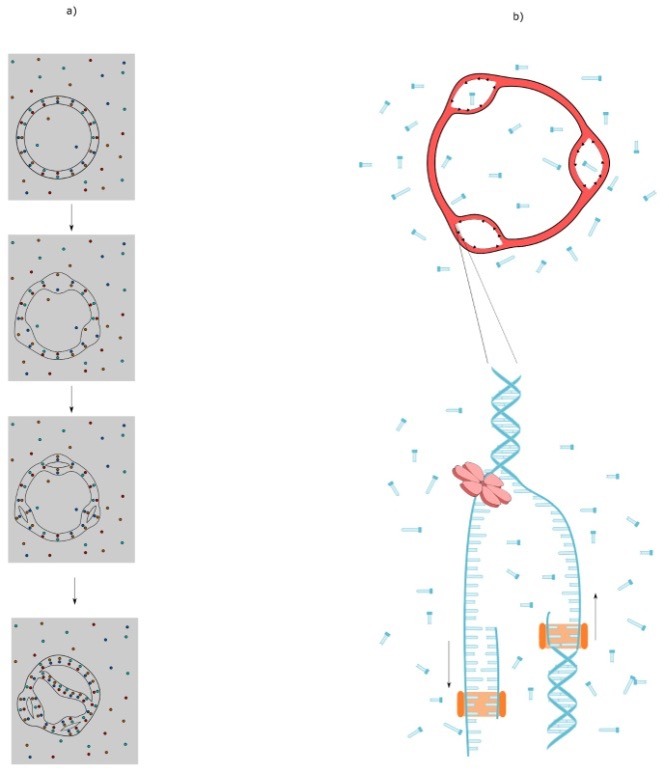

Let us, for the sake of brevity, refer to such processes as simulations; in every simulation there are three realities at play:

Reality A: the simulated reality;

Reality B: the reality where the simulation runs, and

Reality C: the reality underlying (grounding) the first two.

In every such case, reality C coincides with reality B and can never coincide with reality A. This means that agents in B and C can interact directly with each other, but agents living in A cannot interact directly with those in B. We say that reality A does not have being-in-itself: it does not exist in itself, but in(side) another reality (B-C). Reality A is an extrinsic appearance of reality B: entities in reality A are symbols of entities in reality B. The property of existing in itself is called inseitas in Latin.

If we apply this reasoning to a landscape painting, we have:

Reality A: the mountains, valleys, rivers and sheep depicted;

Reality B: the canvas and the oils with which the painting is made;

Reality C: the universe in which the painter lives.

Clearly the painter (reality C) belongs in the same universe as the canvas and oil paints (reality B). The painter is not a character in the painting (reality A does not coincide with reality B) and cannot interact with the sheep painted on the canvas.

The nature of the simulation is always heavily dependent on the means available in the reality where the simulation takes place, and these may change over time: canvas and paint have been around for centuries, whereas computers are a recent development. If we take a video game as another example, we have:

Reality A: the race cars simulated in the video game;

Reality B: the hardware in which the video game software runs;

Reality C: the universe in which the software engineer lives, as well as those who produced the hardware.

The engineer (reality C) belongs to the same universe as the hardware (reality B), yet the engineer does not coincide with an avatar inside the video game: if the avatar dies, the engineer does not.

In a book or movie, such as The Lord of the Rings, we have:

Reality A: Frodo ‘lives’ inside the movie and is subject to (apparent) causal relationships with other entities in the movie (Sauron might kill him);

Reality B: the TV screen where you are watching the film;

Reality C: the universe in which the viewer watching the film lives.

The viewer (reality C) shares the same universe as the TV screen (reality B), and neither can be destroyed by Sauron, whereas Frodo can (since he and Sauron both belong to reality A).

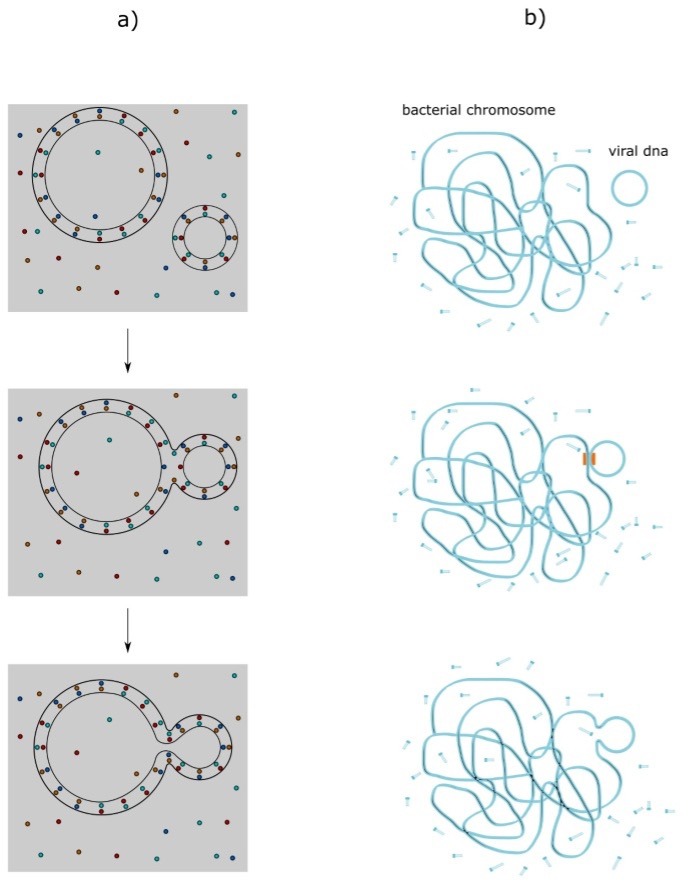

Now let’s apply the same kind of reasoning to conscious perception:

Reality A: the world I seem to perceive and be immersed in, a world I interpret to be made up of matter, energy, space, time, fields, and which is completely describable in quantitative terms.

Reality B: my consciousness, which contains my perceptions, sensations, emotions; ultimately a world entirely populated by qualitative entities.

Reality C: the ground of being.

It follows that the ground of being (reality C) and my consciousness (reality B) belong to the same kind of reality, made up of qualitative stuff. If we could peek outside our own consciousness, we would find no matter, only consciousness itself: the reality within which the ‘matter simulation’ ultimately exists. Matter does not have inseitas; rather, it has its being (its existence) in consciousness. Matter is an extrinsic appearance of consciousness.

How matter and consciousness interact

Having argued for the primacy of consciousness, we can now re-examine the case for matter.

The gnoseological argument tells us that matter, as a concept, needs to be grounded in a qualitative substratum; otherwise it remains suspended in midair. The ontological argument tells us that, within physical reality, matter can perfectly well explain various phenomena without resorting to consciousness. Matter is a coherent conceptual model that lives in a type A reality, and within that reality it does not need to postulate consciousness. Matter is like the narrative of a well-built video game universe, where the rules of the game form a closed referential system: they need not refer to anything outside their universe. This is why it is problematic to study consciousness (the first-person perspective of reality B) from within reality A, through the third-person perspective of science.

However, matter (an A reality) cannot be grounded in itself. Since the reality underlying matter is qualitative in nature, consciousness must come before matter. Following the ontological argument, one may wonder: could the reality of consciousness itself be a simulation (that is, a type A reality within an even more fundamental reality)? Does consciousness have inseitas?

The gnoseological argument suggests that it is not a simulation, because grafted (simulated) realities can only be populated with ‘concepts’ ultimately grounded in qualia, whereas qualia are, by definition, grounded in themselves. Metaphysically, we can say that, since the contents of consciousness (qualia) have perseitas, consciousness has inseitas. Therefore, consciousness is the ground of being.

Another intriguing line of reasoning focuses on what happens at the borders of a type A reality. In a well-designed reality A, no strange behavior should be noticeable: nothing that violates its internal rules, not a single glimpse into reality B. One suggestive hypothesis is that the spooky behaviors of our physical reality, such as quantum phenomena or paranormal phenomena, happen near the limits of the ‘simulation.’

The one and the many

If consciousness is not an afterthought sprung by accident from a material universe—if it truly is the ground of being—our curiosity impels us to ask questions such as: how is it that there are so many ‘individual consciousnesses’ and so many qualia? What are the dynamics of consciousness?

If we focus on how entities interact among themselves, we may notice the following:

- A sum of conceptual entities is extensive, i.e. one comes next to the other (let’s call them ‘things,’ put side by side).

- A sum of qualia, on the other hand, is intensive, i.e. one is inside the other (we shall call them ‘processes’).

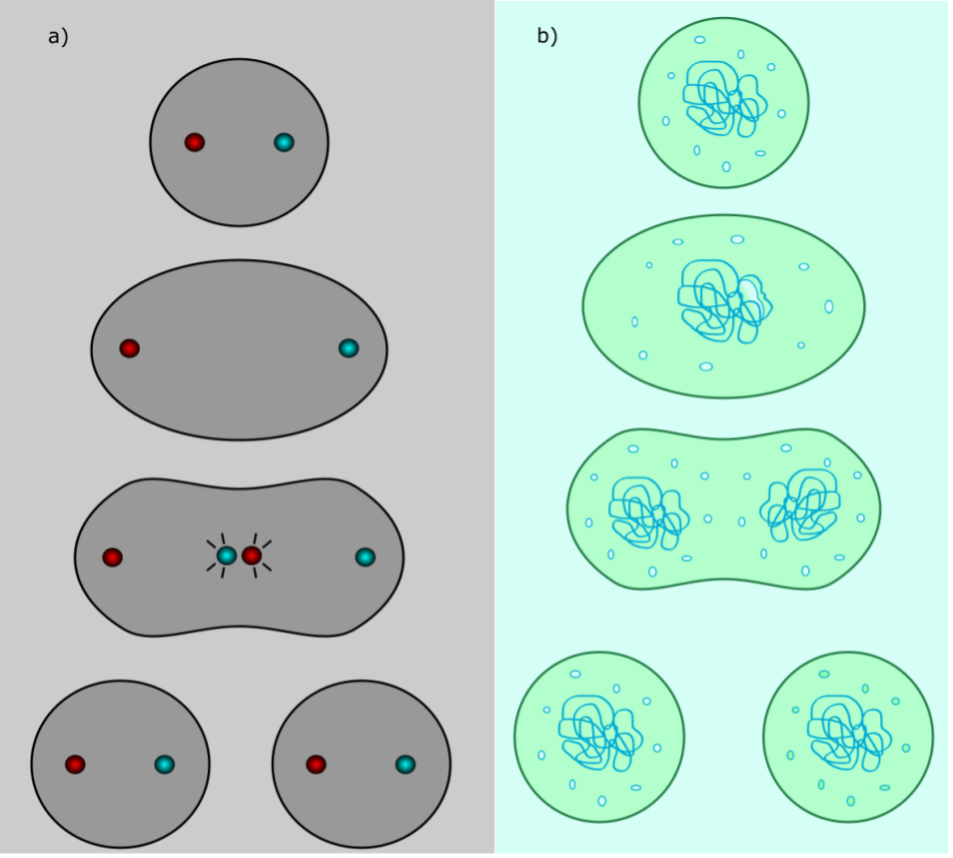

Conceptual entities, being things, are static, whereas qualia, being alive, are processes. Once subdivided, things cannot be reunited into wholeness: no matter how carefully you glue them together, a pile of eggshells cannot go back to being an egg. Processes, on the other hand, have no boundaries and may interpenetrate each other, sometimes to the point of a coincidentia oppositorum: they can be one and many at the same time. Consciousness is one process and, at the same time, many processes.

Let me illustrate this by returning to direct experience: a music concert I attended last week. Had time stopped while I was listening to the symphony, the musical experience itself would have disappeared. All qualia are experienced in the process of one transforming into another, and the transformation itself is a quale. Indeed, we do not perceive time as a sequence of discrete points with no depth, but always as a continuum with duration: experienced time has a certain thickness to it. The idea of physical time as a necklace of microscopic pearls, where every instant is an individual bead, is a useful conceptual abstraction built upon, yet profoundly different from, our qualitative experience of time, in which being and becoming are one and the same [10].

Qualitative, metaphysical time is a fundamental aspect of consciousness, without which certain perceptions, such as that of movement, make no sense. The sum of qualia, in which they interpenetrate each other like fluids, can only happen in time that has thickness. When we experience a moment of ecstasy, beauty or union, the perception of time slows down or, put another way, its thickness swells, intensifying our qualitative perception.

Interestingly, in extensive sums the addends (things) disappear and are no longer distinguishable: 10 can be the result of both 8+2 and 5+5, so the addends cannot be recovered from the sum. In intensive sums, however, the addends (processes) do not disappear, but live more intensely within the larger process that includes them.

Returning to the example of music, notes played separately are distinct qualia. If we play them together, however, we obtain an intensive sum, in which the ‘individual’ notes continue to exist within a new process: a symphony. Once enfolded into the symphony, the same notes seem transfigured, because we perceive them interpenetrating.

Those of us who are amateurs and know little about music have a limited ability to appreciate it, as everything sounds indistinct to us. However, if we devote ourselves to studying how music works and how to compose or play it, the indistinct becomes distinct: we learn to perceive the individual notes. The next time we listen to a symphony, we will perceive the interpenetration, the union in distinction of all the notes, and the experience will be more intense. Unlike things, qualia can grow in intensity when broken down and put back together again. This is the dynamic of qualia at work in each individual conscious being: each individual eye of the universal consciousness.

Thus considered, life may be seen as a creative, never-ending process—an infinite game—of learning how to perceive the distinctness in the indistinct, only to let qualia flow back and merge together, intensifying their combined tastes like so many ingredients in a well-made recipe.

Indeed, such a soup might be true food for the soul.

Bibliography

1. Steven Weinberg (1993). Dreams of a Final Theory.

2. Joseph Campbell (2008). The Hero with a Thousand Faces.

3. Viktor E. Frankl (2008). Man’s Search for Meaning.

4. Yuval Noah Harari (2018). 21 Lessons for the 21st Century.

5. Bernardo Kastrup (2019). The Idea of the World.

6. Sean Carroll (2016). The Big Picture.

7. Carlo Rovelli (2021).

8. Rupert Spira (2017). The Nature of Consciousness.

9. Bernardo Kastrup (2015). Brief Peeks Beyond.

10. Iain McGilchrist (2021). The Matter with Things.